An Adaptive Music System For Pedestrians

For my final year dissertation (which I’m excited to say is now in the process of being published as a paper), I wanted to see if I could bring something I love about video games into real life: adaptive soundtracks. The kind of music that changes and evolves with what you’re doing on screen.

Some of my favourite examples of this in gaming are:

- In The Legend of Zelda: Tears of the Kingdom, the soundtrack gradually layers more instruments as you approach a final boss fight.

- Persona 5 shifts its background music depending on mood or weather, with alternate versions of tracks for different tones, rainy days, and more.

- In Super Mario Odyssey, stepping into an 8-bit zone transforms the music into a retro chiptune version.

These adaptive scores aren’t just background — they guide your attention, shape the mood, and make you feel part of the world. I wanted to try translating those ideas to real-world walking.

Requirements gathering

Before building anything, I needed to know which aspects of the environment actually matter to walkers, and how music could respond when those aspects change.

I ran a focus group where participants watched a first-person walking video and annotated a map with points where they thought a piece of music should change in response to changes in the environment.

We also brainstormed possible musical responses — from changing tempo to adding or removing instruments.

When I combined those ideas with what the literature already says about pedestrian experience, I landed on four key features to focus on:

- Greenery (trees, plants, parks)

- Traffic/busyness (cars, people, general activity)

- Pavement width (tight vs. spacious)

- Street aesthetics (how attractive a street looks)

On the music side, I decided to use instrument layering: splitting a track into stems (bass, drums, vocals, etc.) and adjusting the volume of each layer based on the environment. Because plenty of AI stem-splitting tools are available, this approach works with almost any song, so the system could feasibly be applied to a user's entire music playlist.
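As a rough illustration of instrument layering, here is a minimal Python sketch (not the system's actual code) that mixes separated stems using one gain per stem. It assumes each stem has already been decoded into a time-aligned list of mono samples in [-1, 1], all of equal length; the `mix_stems` name and the hard-clipping step are illustrative choices.

```python
from typing import Dict, List


def mix_stems(stems: Dict[str, List[float]], gains: Dict[str, float]) -> List[float]:
    """Sum time-aligned stems, scaling each one by its gain.

    Gains are clamped to [0, 1]; stems with no gain entry are muted.
    """
    if not stems:
        return []
    length = len(next(iter(stems.values())))
    if any(len(samples) != length for samples in stems.values()):
        raise ValueError("all stems must have the same number of samples")
    mixed = [0.0] * length
    for name, samples in stems.items():
        gain = min(max(gains.get(name, 0.0), 0.0), 1.0)
        for i, sample in enumerate(samples):
            mixed[i] += gain * sample
    # Hard-clip so the summed signal stays within [-1, 1].
    return [min(max(x, -1.0), 1.0) for x in mixed]


stems = {"drums": [0.5, -0.5], "bass": [0.2, 0.2], "vocals": [0.4, 0.0]}
print(mix_stems(stems, {"drums": 1.0, "bass": 0.5}))  # vocals have no gain, so they are muted
```

In a real player the gains would change continuously, and it would likely be worth ramping them over a short window so volume changes don't produce audible clicks.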

Feasibility Study

Next, I wanted to know: can we actually detect these features in real time?

I hacked together a prototype using Meta’s Project Aria glasses, which captured live video while walking. That video was fed into an object detection model (YOLO), which counted cars and people to estimate traffic density. At the same time, I used AI-based music stem separation to split a song into layers.
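The exact formula for the traffic score isn't given here, so the following Python sketch is only one plausible way to turn per-frame detections into a 0–1 score. It assumes the detector's output has already been reduced to a list of class labels per frame (COCO-trained YOLO models use labels such as `person`, `car`, `bus`, and `truck`); the saturation counts, the 50/50 weighting, and the smoothing factor are hypothetical values chosen for illustration.

```python
from typing import Iterable

# Hypothetical saturation points: counts at or above these give a full score.
MAX_PEOPLE = 10
MAX_VEHICLES = 5
VEHICLE_LABELS = {"car", "bus", "truck", "motorcycle", "bicycle"}


def traffic_score(labels: Iterable[str], people_weight: float = 0.5) -> float:
    """Combine person and vehicle counts from one frame into a score in [0, 1]."""
    labels = list(labels)
    people = sum(1 for label in labels if label == "person")
    vehicles = sum(1 for label in labels if label in VEHICLE_LABELS)
    people_term = min(people / MAX_PEOPLE, 1.0)
    vehicle_term = min(vehicles / MAX_VEHICLES, 1.0)
    return people_weight * people_term + (1.0 - people_weight) * vehicle_term


def smooth(previous: float, current: float, alpha: float = 0.2) -> float:
    """Exponential moving average, so per-frame detection noise doesn't make the music jump."""
    return previous + alpha * (current - previous)


# Example: one frame with 5 people and 5 cars, smoothed from a previous score of 0.
frame = ["person"] * 5 + ["car"] * 5
print(smooth(0.0, traffic_score(frame)))
```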

As the traffic “score” increased, more layers were added, making the music richer and more intense. Previous research has also shown that similar computer vision techniques can capture the other three affordances (the environmental features identified above): estimating greenery levels in an area, measuring path width, and, with machine learning, detecting aesthetic qualities of architecture. The prototype was a proof of concept that, with the right technology, this could work in the future.
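The “higher score, more layers” behaviour can be expressed as a threshold rule. In this hypothetical Python sketch, layers switch on in a fixed order as the score rises, with thresholds spaced evenly across 0–1; the layer order and the choice to always keep the first layer playing are assumptions, not details of the prototype.

```python
import math
from typing import List, Sequence


def active_layers(score: float, layer_order: Sequence[str]) -> List[str]:
    """Return the layers to play for a score in [0, 1].

    Layer k (0-based) switches on once score * (n - 1) >= k, so a score of 0
    plays only the first layer and a score of 1 plays all n layers.
    """
    if not layer_order:
        return []
    score = min(max(score, 0.0), 1.0)  # clamp out-of-range scores
    extra = math.floor(score * (len(layer_order) - 1))
    return list(layer_order[: 1 + extra])


# Hypothetical order: rhythm first, vocals only when it's busiest.
order = ["drums", "bass", "other", "vocals"]
print(active_layers(0.5, order))  # ['drums', 'bass']
```

Evenly spaced thresholds keep the rule simple; a smoother alternative would be to fade each layer in gradually rather than switching it on at a single point.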

Wizard of Oz User Study

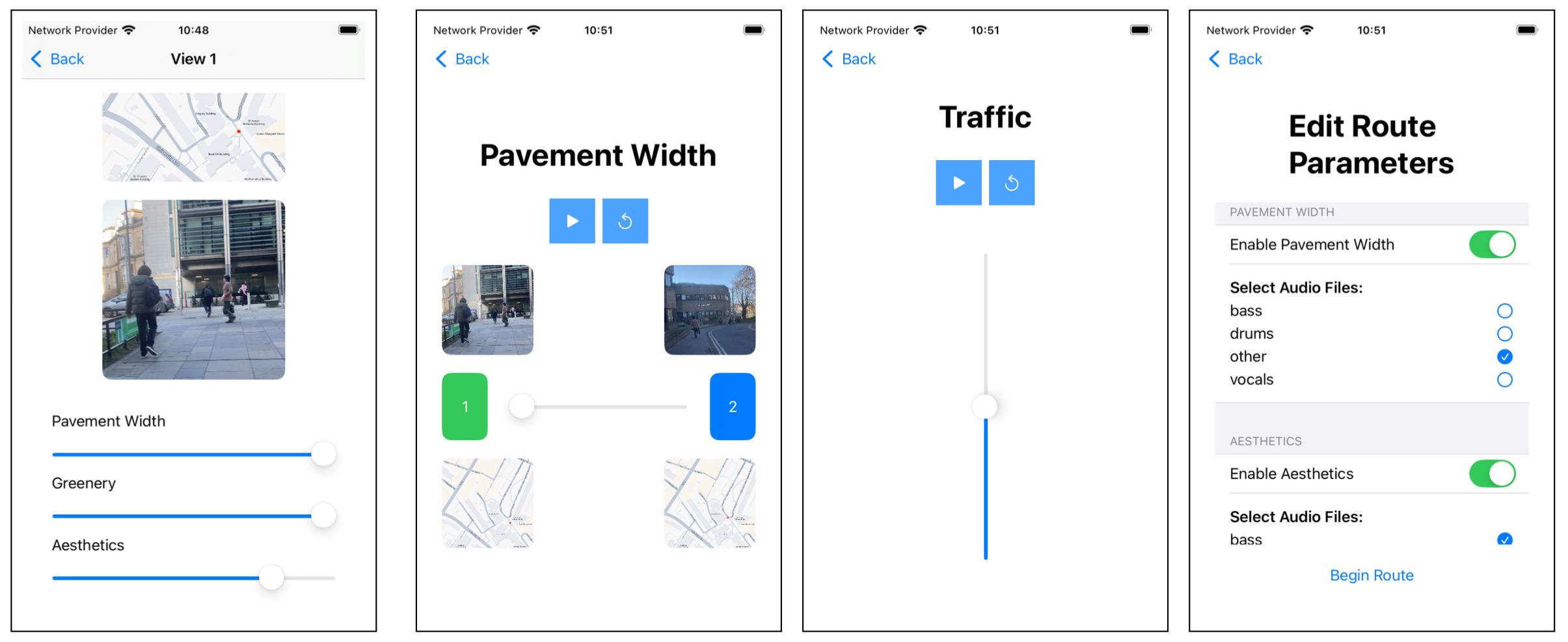

For the main evaluation, I ran a Wizard-of-Oz style user study. Instead of relying on unreliable sensing and computer vision, I built a custom native iOS app in Swift that let me manually control the music in real time while walking alongside participants. This meant they experienced a smooth “ideal case” version of the system: essentially, what it should feel like when fully automated.

The study took place along a 0.5 km university campus route that I divided into 14 segments, each chosen because something about the environment shifted noticeably — for example, the amount of greenery, how wide the pavement was, or the general aesthetic of the street. For each segment, I assigned affordance scores (0–1 scale) based on:

- Greenery – estimated from the proportion of visible trees, grass, or plants

- Pavement width – measured directly on-site

- Aesthetics – rated by independent scorers who watched video clips of each segment

- Traffic density – treated as a dynamic factor, measured live by observation
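How the raw measurements were converted to the 0–1 scale isn't specified here. One simple option, sketched below in Python purely as an assumption, is min-max normalisation of pavement widths across all segments, and averaging the independent scorers' ratings for aesthetics before rescaling them; the 1–5 rating scale is hypothetical. Greenery, as a proportion of the view, would already fall between 0 and 1.

```python
from statistics import mean
from typing import List, Sequence


def min_max_normalise(values: Sequence[float]) -> List[float]:
    """Rescale raw values (e.g. pavement widths in metres) so the smallest is 0 and the largest is 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # all segments identical: no variation to express
    return [(v - lo) / (hi - lo) for v in values]


def aesthetics_score(ratings: Sequence[int], scale_min: int = 1, scale_max: int = 5) -> float:
    """Average several raters' scores for one segment and map the hypothetical 1-5 scale onto 0-1."""
    return (mean(ratings) - scale_min) / (scale_max - scale_min)


print(min_max_normalise([1.5, 3.0, 4.5]))  # [0.0, 0.5, 1.0]
print(aesthetics_score([4, 5, 3]))         # 0.75
```

Min-max scaling is relative to the route itself, so the widest pavement on this route scores 1 regardless of how wide it is in absolute terms.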

In the app, static affordances (like greenery or pavement width) were tied to these pre-set scores. To create smooth transitions between segments, the app gave me a slider to interpolate between values — so as participants walked, the music could gradually shift. For traffic, I adjusted the score live using another slider, based on the density of people and cars in the immediate area.
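The slider-based transition between two segments amounts to linear interpolation. Here is a minimal Python sketch, assuming each segment's static scores are stored as a dictionary keyed by affordance name (the key names are hypothetical) and `t` is the slider position from 0 (current segment) to 1 (next segment).

```python
from typing import Dict


def interpolate_scores(start: Dict[str, float], end: Dict[str, float], t: float) -> Dict[str, float]:
    """Linearly blend two segments' static scores; t is the slider position in [0, 1].

    Both dictionaries are expected to share the same affordance keys.
    """
    t = min(max(t, 0.0), 1.0)  # clamp so the slider can't overshoot
    return {name: start[name] + t * (end[name] - start[name]) for name in start}


segment_a = {"greenery": 0.2, "pavement_width": 0.8}
segment_b = {"greenery": 0.6, "pavement_width": 0.4}
print(interpolate_scores(segment_a, segment_b, 0.5))
```

Traffic is deliberately absent here: it was set live with its own slider rather than interpolated between pre-set values.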

Each participant walked the route five times: once for each single-affordance condition (greenery, traffic, pavement width, aesthetics), plus a Curated condition, where they got to choose which affordances controlled which instrument layers (e.g. vocals → traffic, bass → pavement width).
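A Curated mapping such as “vocals → traffic, bass → pavement width” fits naturally into a small lookup table. In this hypothetical Python sketch, each mapped stem takes its volume from its affordance's current score; the stem and affordance names, and leaving unmapped stems at full volume, are assumptions rather than details from the study.

```python
from typing import Dict, Mapping, Sequence

AFFORDANCES = {"greenery", "traffic", "pavement_width", "aesthetics"}


def stem_gains(
    mapping: Mapping[str, str],
    scores: Mapping[str, float],
    stems: Sequence[str],
) -> Dict[str, float]:
    """Resolve a per-stem gain from a stem -> affordance mapping."""
    for stem, affordance in mapping.items():
        if affordance not in AFFORDANCES:
            raise ValueError(f"unknown affordance for {stem!r}: {affordance!r}")
    # Unmapped stems play at full volume (an assumption for this sketch).
    return {stem: scores[mapping[stem]] if stem in mapping else 1.0 for stem in stems}


curated = {"vocals": "traffic", "bass": "pavement_width"}
scores = {"greenery": 0.3, "traffic": 0.9, "pavement_width": 0.2, "aesthetics": 0.5}
print(stem_gains(curated, scores, ["vocals", "drums", "bass", "other"]))
# {'vocals': 0.9, 'drums': 1.0, 'bass': 0.2, 'other': 1.0}
```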

The app supported all of this by letting me:

- progress through the route segment by segment, updating scores,

- smoothly interpolate affordance values with sliders,

- and in the Curated condition, set up participants’ own mappings between environment and music.
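These segment-by-segment controls can be modelled as a small state machine. The Python sketch below is hypothetical (the real app was written in Swift, and its internals aren't described here): it steps through pre-set per-segment scores and overlays the live traffic value from its own slider.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class RouteController:
    """Hypothetical model of the wizard's route controls."""

    segments: List[Dict[str, float]]  # pre-set static scores, one dict per segment
    index: int = 0                    # segment the participant is currently in
    traffic: float = 0.0              # live traffic slider value

    def advance(self) -> None:
        """Move to the next segment, stopping at the end of the route."""
        if self.index < len(self.segments) - 1:
            self.index += 1

    def set_traffic(self, value: float) -> None:
        """Update the live traffic score, clamped to [0, 1]."""
        self.traffic = min(max(value, 0.0), 1.0)

    def scores(self) -> Dict[str, float]:
        """Current segment's static scores plus the live traffic score."""
        current = dict(self.segments[self.index])
        current["traffic"] = self.traffic
        return current


route = RouteController(segments=[{"greenery": 0.1}, {"greenery": 0.7}])
route.advance()
route.set_traffic(1.4)
print(route.scores())  # {'greenery': 0.7, 'traffic': 1.0}
```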

Wrapping Up

I won’t spoil the results here (you'll have to wait for the full paper for that), but running this project let me see how much potential there is for bringing adaptive music into everyday life. Just like in games, responsive soundtracks can change how you move, notice things, and even feel in familiar spaces.

Published on: August 26, 2025